Recently I’ve been migrating quite a few M queries from .pbix files to dataflows. In this blog post, I’m sharing a few things I learned in the process.

Dataflows are Power Query queries designed online with the output stored in Azure Data Lake Storage Gen2 as CSV files. Therefore, you need to be aware of a few things when working with dataflows. Some of the issues I encountered when working with dataflows, such as poor UI, are likely to be only temporary, and I’m not going to list them all because they will probably go away soon.

Other things are probably inherent to storing the data in CSV files, and this is something you need to keep in mind when designing your dataflows. Here are the issues I’m covering in this blog post:

- Empty strings vs nulls

- Compression

- Existing table relationships

- Data types

Empty strings vs nulls

Power Query does differentiate between nulls and empty strings in text columns. This is how they appear in the dataflows Power Query editor:

![]()

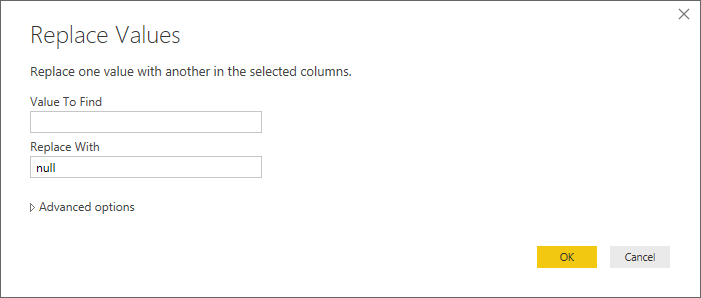

The problem is that both are stored in the same way when you use dataflows: you always get empty strings when you work with text. This is what you see when you get the data in Power BI Desktop from the dataflow:

![]()

Implications

When loaded, nulls in M become BLANK values in DAX. Getting empty strings instead of nulls may lead to unexpected results, because different functions in DAX treat empty strings and blanks differently.

Tips

Personally, I find nulls/blanks preferable to empty strings. If you are like me, you will need to replace empty strings with nulls when loading data from your dataflows:

Compression

If you are a long-time user of Power BI, you’ll know it is very good at compressing the data, because its engine uses a columnar database called VertiPaq behind the scenes.

If you have a table with a single column and the same value is repeated a million times, the size of your .pbix file will be tiny — about 25 KB, to be more precise. The actual value stored does not matter — the size will be the same whether it’s “1” repeated a million times, or “This is a super duper long phrase designed to surprise you” repeated a million times. This is because VertiPaq uses smart algorithms when compressing its data — this topic is outside of scope of this blog post.

On the other hand, the size of the CSV that contains the same value repeated a million times is directly proportional to the length of the repeated value, because there is no compression at all.

Implications

When you use dataflows, you need to be aware that CSVs are compressed differently, if at all. I am not an expert on the subject — all I know is that even if data is compressed, the compression is nowhere near as good as Power BI’s.

Specifically, a dataflow that uses long text keys, such as GUIDs, will take much more space than a dataflow with integer keys. Even if you are not using your own storage for dataflows, this will still affect you: it will take longer to refresh your dataset, because the file size to read (CSV) will be larger. The dataflow itself will likely take longer to refresh (write) as well.

Tips

As much as possible, try to shorten the values you are using. For example, if you have a Logical type column with true and false values, save them as integers instead — 1 for true and 0 for false. This will shave off three or four bytes per value, because true and false values are stored exactly like that — true and false, taking four or five bytes. When reading your dataflow in Power BI Desktop, set the data type to Logical — Power BI will correctly convert the values from 1 and 0 to true and false, respectively, on the fly.

Also, don’t be surprised if your .pbix file size grows in size after you switch to dataflows. One of my .pbix files was 150 MB before using a dataflow; afterwards, it became 200 MB with the same data. The data was identical, and I still don’t know why there was such a massive difference. While I could try sorting my dataflow to improve compression, this is not really an option when your dataflow CSV file is several gigabytes in size.

From my observations, Power BI Desktop reads a portion of a dataflow first, so that it can use its heuristics to determine the best sorting order for compression. My original query was from a database, so perhaps Power BI used to get a higher quality sample and made better choices when sorting data — hopefully in the future we will be able to change the default settings and let Power BI spend more time figuring out the optimal sort order, just like we can do in Analysis Services today.

Existing table relationships

If you are replacing an existing Power Query query with a dataflow, be aware that doing so may break the existing relationships.

Implications

You should not assume that simply replacing your query with a dataflow will be okay. Even if you maintain the same column names and types, the relationships may still break, and you may still need to recreate them. I don’t know why some survive and some don’t — it would be great if this process was predictable.

Tips

Write down your list of relationships and check if it’s intact after updating your queries. To simplify the task, you can use VertiPaq Analyzer, which has a dedicated sheet that lists all relationships.

Data types

This is an issue that I hope will go away. Currently, there is no data type parity between Power Query in Power BI Desktop and Power Query in dataflows. Specifically,

- Fixed decimal number is converted into Double

- Date is converted to DateTime

- Time is also converted to DateTime

Implications

The issue with Fixed decimal number is a mere inconvenience, because the numbers are stored with four decimal places anyway, so all you need to do is set the data type when loading the data from dataflow.

Interestingly, if you download the JSON schema of your dataflow, Fixed decimal number values will be “double” data type, and regular Decimal number values will be “decimal”. Double is a legitimate data type in Power Query similar to Decimal, so it’s no surprise that Power BI Desktop does not read it as Fixed decimal number.

Another interesting titbit is that the Fixed decimal number data type is called Currency in dataflows — the old name the data type used to have in Power Query some time ago.

Dates without times are stored as dates too, which is efficient. Again, you just need to set the correct data type when loading the data in Power BI Desktop.

Times are efficiently stored without dates. You need to set the data type to Time when loading the data — otherwise you will get times of the current date, like 22/01/2019 12:30 AM.

If you find the lack of parity annoying, please vote for the idea on the Power BI Ideas website: Dataflows data types parity with Power Query.

Tips

When connecting to your dataflow, ensure all the data types are set exactly as you expect them to be. Don’t assume that just because you set the data types correctly in Power BI service, they will be loaded correctly.

Conclusion

I really like dataflows. I am sure Microsoft will soon close the gap between Power Query Editor in Power BI Desktop and Power BI service, and if you keep in mind that dataflows data is stored as CSVs with its nuances, you can avoid many issues.