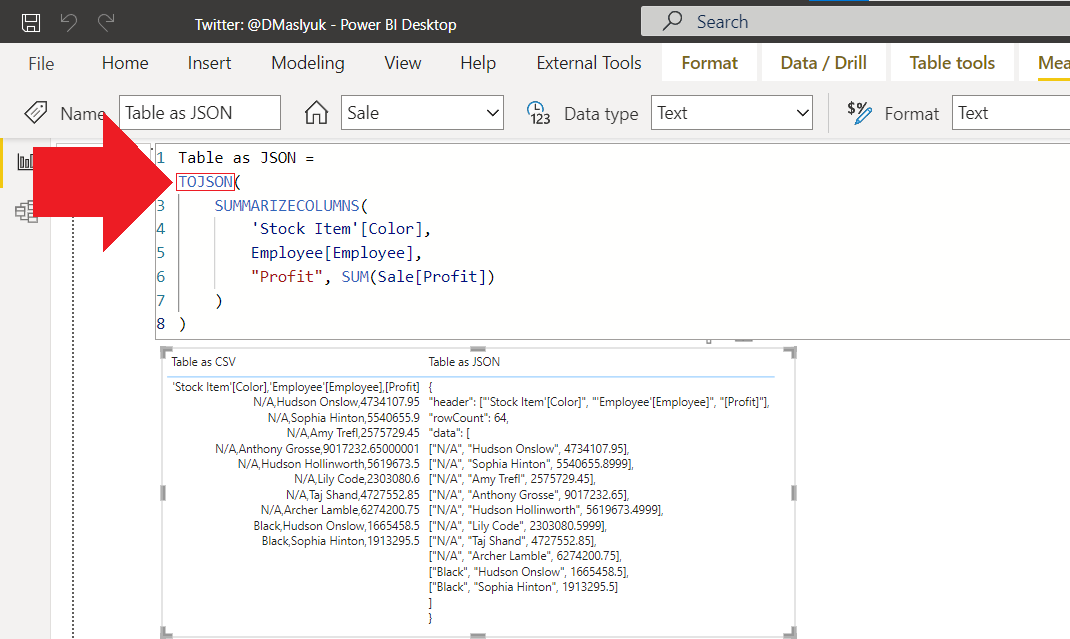

In October 2022, two new DAX functions appeared in the Power BI service: TOCSV and TOJSON. In this blog post, I’m showing a few use cases.

When I posted about the functions, several people asked why they would be useful:

Fair questions. While I don’t know what the “official” use case is, I’m going to show a few.

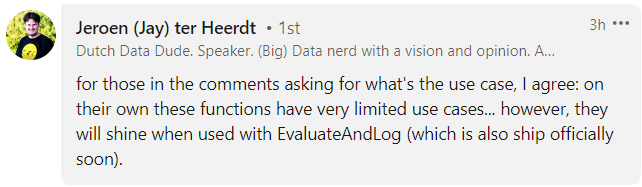

Update 26 October 2022: Jeroen ter Heerdt from Microsoft says TOCSV and TOJSON are meant to be paired with EVALUATEANDLOG:

Read on for other use cases.

Debugging DAX

It’s a well-known fact that measures in DAX can only return scalar values. What if your measure contains some tables, and you want to debug your measure, stepping through variables, some of which may be tables? Then you had to use DAX Studio or create calculated tables… Until now. Bernat Agulló Roselló‘s idea was to use TOCSV for debugging purposes:

Indeed, since TOCSV will be slightly more readable than JSON to a human eye, you can use TOCSV to “see” intermediate tables in your measures.

Overcoming the table limit in Power Automate

Daryl Lynch thought of Power Automate:

In Power Automate, you can use the Run a query against a dataset (also known as Execute Queries) action to get data from your Power BI datasets. Even though DAX queries allow you to have more than one EVALUATE statement and get more than one table as a result, Execute Queries currently only returns the first table.

By using TOCSV or TOJSON, you can combine different tables in one query, even if the tables are heterogeneous. In the Wide World Importers dataset, the following query will give you two tables in one query, which you can then split later in Power Automate.

EVALUATE

{

TOJSON(Employee),

TOJSON(Customer)

}The benefit is that you’ll send fewer queries to Power BI this way.

Overcoming (some) limitations of Power BI REST API

The Execute Queries Power BI REST API call is limited to “maximum of 100,000 rows or 1,000,000 values per query (whichever is hit first)”. Since TOCSV or TOJSON will return just one value (albeit long), you’ll be well within limits. I tested this, and I managed to get over 100,000 rows. I ran the following query against the Wide World Importers dataset:

EVALUATE

{

TOJSON(

SELECTCOLUMNS(

Sale,

"Customer", Sale[Customer Key]

),

10000000

)

}Note two things here:

- I’m using 1000000 as the second parameter in TOJSON, which is the maximum number of rows, because the default is 10. While DAX Guide says “A negative number means all rows are converted”, I couldn’t make a negative number work, so I just hardcoded a number that I knew would be greater than the number of rows. You can’t use expressions, not even 10^6. Hopefully negative numbers will work in the future.

- Even though TOJSON will contain a “table”, it’s still a scalar value, and you need to return a table. One way of doing it is using braces, or you could use the ROW function.

That being said, there’s a limit of 15MB of data per query, so query wisely 🙂